Architecture overview


Data Classification

Elqano only indexes the documents made available to the service. The corresponding SharePoint and Teams sites will be selected by the project team.

Document indexing is done on a continuous basis: a daily task ensures that the document knowledge base is up to date.

Administrators (see below for role details) are the only people who can define which sites the application can access. They do so through the application's admin interface.

The application relies on an Azure AD enterprise application to define two app roles:

- User role

- Admin role

Users’ roles are then passed to the application when they authenticate (the roles are shared through a custom assigned attribute).
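As an illustration, the roles received at sign-in could be mapped to application permissions as in the sketch below. The role values `User` and `Admin`, the `Permissions` type and the rule that an admin also gets regular user access are assumptions made for the example, not Elqano's actual implementation.

```python
from dataclasses import dataclass

# Hypothetical role values: the real values are whatever the two app roles
# are named in the enterprise application.
USER_ROLE = "User"
ADMIN_ROLE = "Admin"


@dataclass(frozen=True)
class Permissions:
    can_use_wall_and_chat: bool
    can_manage_sites: bool


def permissions_from_roles(roles: list[str]) -> Permissions:
    """Map the role values received at sign-in to application permissions."""
    role_set = set(roles)
    is_admin = ADMIN_ROLE in role_set
    # Assumption: an admin also gets regular user access.
    is_user = is_admin or USER_ROLE in role_set
    return Permissions(can_use_wall_and_chat=is_user, can_manage_sites=is_admin)


# A regular user can use the wall and chat but cannot choose the indexed sites.
print(permissions_from_roles(["User"]))
```

Users with neither role get no permissions at all, which matches assigning access through the enterprise application.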

In short, regular users can access:

- The application wall, which is accessible:

  - directly through the application web interface

  - through the Teams wall tab

- The Teams chat tab

Architecture Security review

This section reviews the security of the application components, as well as all communication protocols and data encryption.

Components

The information in this section describes the product as installed in the customer’s Azure tenant.

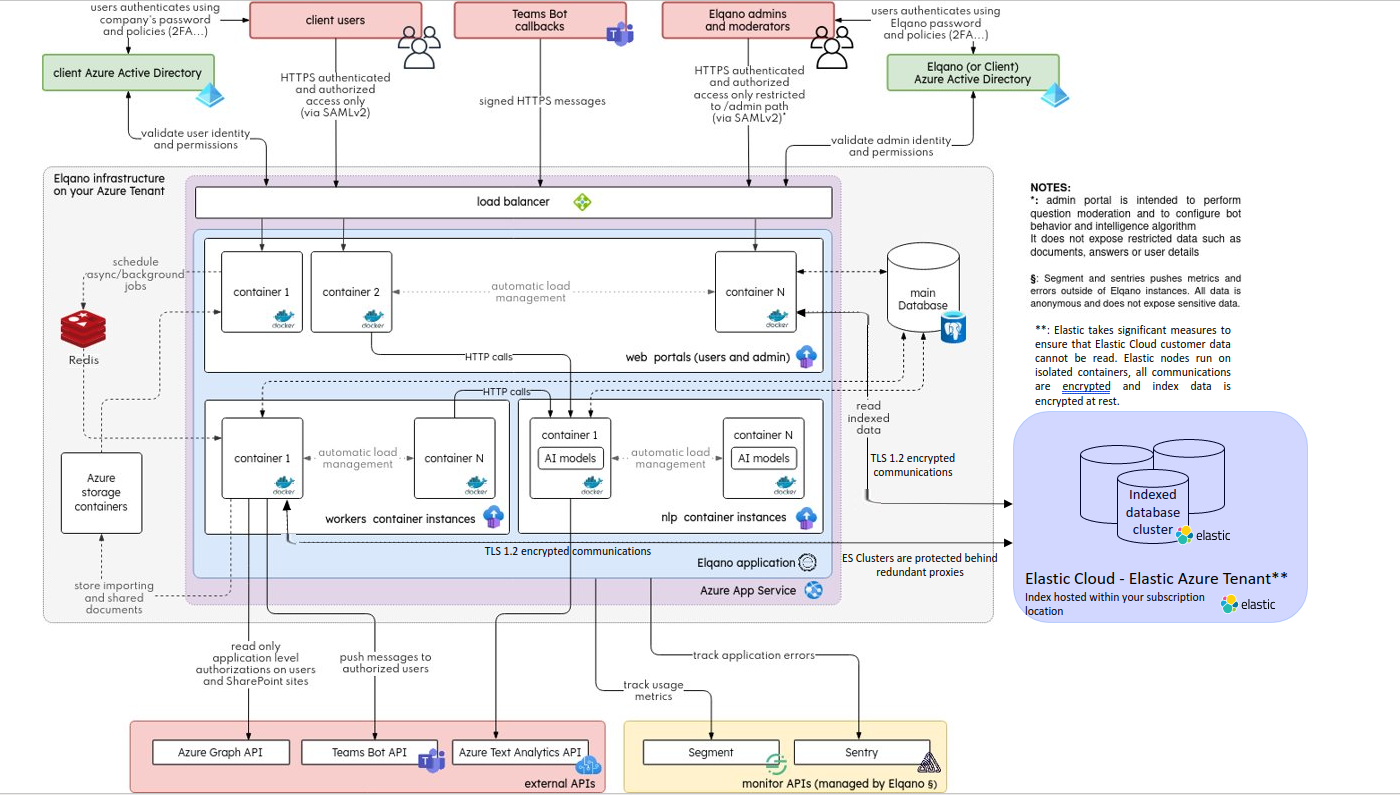

The following diagram represents the global architecture of the solution

Except for the Elastic index (see the note below), all these technical components are Azure managed services running in the customer’s Azure tenant. They form the core, or engine, of the solution.

| Component | Solution | Recommended service |
|---|---|---|
| Web servers | Docker environment | Azure App Service (docker-compose app) |
| Database | PostgreSQL | Azure Database for PostgreSQL |
| Document index | Elasticsearch | Elastic Cloud (Elasticsearch managed service) |
| Component secrets storage | Key Vault | Azure Key Vault |
| Document processing storage | Azure Storage account | |
| Asynchronous task queue | Redis | Azure Cache for Redis |

Note: The Elastic index is hosted in Elastic's Azure tenant, in the location of the Elastic subscription. Elastic takes significant measures to secure hosted data: it uses isolated containers, encrypted communications and encrypted databases. For more details, see https://www.elastic.co/cloud/security.

Version updates, log management and load management are handled by the managed services themselves.

Elasticsearch

Elqano uses Elasticsearch and Elastic Enterprise Search as its document index, through the Elastic Cloud (Elasticsearch managed service) offering.

It is a SaaS service provided by Elastic and hosted in the same Azure location as the application.

Access is authenticated with a private key and uses HTTPS for all queries.

Access to the service's management console is restricted to the customer’s administrators.

Access to the Elastic index is additionally protected by a firewall that only allows App Service IPs.

This component stores an index of the textual content of all documents, as well as the questions and answers, which serves as the source for the Artificial Intelligence algorithms.
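Conceptually, the firewalls described in this document are IP allowlists. The sketch below only illustrates the check such a rule performs; in practice it is configured in each managed service's firewall settings, not written in application code. The addresses come from a documentation range and stand in for the App Service outbound IPs, which are assumptions here.

```python
import ipaddress

# Hypothetical App Service outbound IPs (documentation range 203.0.113.0/24).
ALLOWED_SOURCES = [
    ipaddress.ip_network("203.0.113.10/32"),
    ipaddress.ip_network("203.0.113.11/32"),
]


def is_allowed(source_ip: str) -> bool:
    """Return True if the caller's IP belongs to one of the allowed networks."""
    addr = ipaddress.ip_address(source_ip)
    # An IPv6 address is never contained in an IPv4 network, so it is rejected.
    return any(addr in network for network in ALLOWED_SOURCES)
```

With this rule, a request from `203.0.113.10` is accepted while any other source, such as a user's home connection, is rejected before authentication is even attempted.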

PostgreSQL

Elqano uses PostgreSQL as its main database engine, through Azure Database for PostgreSQL.

Access is authenticated with credentials (username and password) and uses SSL encryption for all queries.

Access is additionally protected by a firewall that only allows App Service IPs.

This component stores all application data (user profiles, data-source configuration, questions and answers, etc.).
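For illustration, a client connecting to Azure Database for PostgreSQL can enforce encryption through libpq's `sslmode` parameter. The sketch below only builds a keyword/value connection string; the host, database and user names are placeholders, and `require` is one possible choice (`verify-full` additionally validates the server certificate and host name).

```python
def build_postgres_dsn(host: str, dbname: str, user: str, password: str,
                       port: int = 5432) -> str:
    """Build a libpq keyword/value connection string that requires SSL."""

    def quote(value: str) -> str:
        # libpq syntax: wrap values in single quotes, backslash-escape ' and \.
        escaped = value.replace("\\", "\\\\").replace("'", "\\'")
        return f"'{escaped}'"

    params = {
        "host": host,
        "port": str(port),
        "dbname": dbname,
        "user": user,
        "password": password,
        # Refuse unencrypted connections (does not verify the certificate).
        "sslmode": "require",
    }
    return " ".join(f"{key}={quote(value)}" for key, value in params.items())
```

The resulting string can be passed to any libpq-based driver; the password itself would come from the App Service configuration rather than from source code.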

Storage

Elqano uses an Azure Storage account to:

- temporarily store documents during indexing

- store admin reports

- store files uploaded from users' computers (if the feature is enabled).

It contains two blob containers:

- The document collection, which temporarily stores new documents during the indexing process.

- The report collection, which stores generated statistics reports.

Access is authenticated with an access key and uses HTTPS for all queries.

The Azure Storage account can additionally be protected by a firewall that only allows App Service IPs and user IPs. Please note that adding this firewall has two requirements:

- User IP addresses must be known; otherwise, admins will not be able to access usage reports and, if the feature is enabled, users will not be able to access files uploaded from their computers.

- The Azure Storage account and the App Service must be located in two distinct Azure locations; otherwise, the App Service reaches the storage account over the Azure backbone network, where IP-based firewall rules do not apply.

Therefore, this option is only recommended if users connect through a VPN.

Access to documents uploaded from users' computers is restricted to authenticated users. Authentication is performed by SSO via Azure AD, using the SAMLv2 protocol for user requests.

App Service

The application uses an Azure App Service running a Docker Compose (multi-container) app to execute tasks and respond to user requests. The App Service runs the following Docker containers.

Elqanoqa

It is the application web server.

Its content is accessible to users authenticated via SSO, from Microsoft Teams and from the internet.

This service only accepts HTTPS requests over TLS 1.2, and the Teams integration relies on callbacks over TLS 1.2.

Elqanoqa_worker

Background processor. This service does not expose any HTTP port; it only consumes scheduled tasks from the Redis queues.

Elqanoqa_clock

Small service responsible for scheduling recurring tasks. It only enqueues cron jobs into the Redis queue so that the worker can process them.
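The split between the clock and the worker can be sketched as follows. This is a simplified model, not Elqano's code: an in-process `queue.Queue` stands in for the Redis queue, and the job names and daily periods are hypothetical.

```python
import queue
from datetime import datetime, timedelta

# Stand-in for the Redis queue; the real deployment uses Azure Cache for Redis.
task_queue = queue.Queue()

# Hypothetical recurring jobs and their periods (e.g. the daily indexing task).
SCHEDULE = {
    "index_documents": timedelta(days=1),
    "sync_users": timedelta(days=1),
}


def clock_tick(now: datetime, last_run: dict[str, datetime]) -> None:
    """Clock role: enqueue every job whose period has elapsed; never run jobs itself."""
    for job, period in SCHEDULE.items():
        previous = last_run.get(job)
        if previous is None or now - previous >= period:
            # Only a job name and a timestamp are queued, no document content.
            task_queue.put({"job": job, "enqueued_at": now.isoformat()})
            last_run[job] = now


def worker_drain() -> list[str]:
    """Worker role: consume queued jobs (here it only records their names)."""
    processed = []
    while not task_queue.empty():
        processed.append(task_queue.get()["job"])
        task_queue.task_done()
    return processed
```

Keeping the clock this small means it can run as a single instance, while the actual work is done by the worker.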

NLP

Artificial Intelligence engine. It connects to the data sources to provide Elqano's AI features.

Environment variables

The access keys and URLs of the other components are stored in the App Service configuration, as environment variables that are only accessible to the containers and to people with access to the App Service.
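A container typically reads these settings once at startup and stops if one is missing. The sketch below shows that pattern; the variable names and the `ServiceConfig` fields are hypothetical, since the actual names are defined by the deployment.

```python
import os
from collections.abc import Mapping
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceConfig:
    database_url: str
    redis_url: str
    elasticsearch_url: str
    elasticsearch_key: str


# Hypothetical variable names: the real names are set in the App Service configuration.
REQUIRED_VARS = {
    "database_url": "DATABASE_URL",
    "redis_url": "REDIS_URL",
    "elasticsearch_url": "ELASTICSEARCH_URL",
    "elasticsearch_key": "ELASTICSEARCH_KEY",
}


def load_config(env: Mapping[str, str] = os.environ) -> ServiceConfig:
    """Read every required setting and fail fast if any of them is missing."""
    missing = [name for name in REQUIRED_VARS.values() if not env.get(name)]
    if missing:
        # Only variable names are reported, never secret values.
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return ServiceConfig(**{field: env[name] for field, name in REQUIRED_VARS.items()})
```

Passing the environment as a parameter keeps the function testable without touching the real process environment.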

Redis

Elqano uses Redis, through Azure Cache for Redis, as a message queue for asynchronous task processing and as a cache.

Access is authenticated with access keys and uses TLS for all connections.

Access is restricted to the Elqano application through IP address filtering (firewall).

This component stores the cache for recurring processes as well as scheduled background tasks. The cached data supports the indexing process and contains no sensitive data, only details such as task names and IDs. It is used during resource-intensive time slots.

SharePoint

Elqano will connect to SharePoint through the Microsoft Graph API to retrieve the documents of SharePoint sites.

Through the Microsoft Graph API, Elqano will only be allowed to index specific SharePoint sites.
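The sketch below illustrates one way to restrict indexing to the sites selected by administrators: the application filters the sites it discovers against the admin-selected list before calling the Graph endpoint that lists the root folder of a site's document library (`/sites/{site-id}/drive/root/children`). Enforcing the restriction in application code, and the function names, are assumptions for the example.

```python
GRAPH_ROOT = "https://graph.microsoft.com/v1.0"


def sites_to_index(available_site_ids: list[str], selected_site_ids: set[str]) -> list[str]:
    """Keep only the sites an administrator explicitly selected, in discovery order."""
    return [site_id for site_id in available_site_ids if site_id in selected_site_ids]


def root_listing_url(site_id: str) -> str:
    """Graph URL listing the root folder of a site's default document library."""
    return f"{GRAPH_ROOT}/sites/{site_id}/drive/root/children"


# Hypothetical site IDs (Graph site IDs look like "host,site-guid,web-guid").
available = ["hr.sharepoint.com,1,2", "projects.sharepoint.com,3,4"]
for site in sites_to_index(available, {"projects.sharepoint.com,3,4"}):
    print(root_listing_url(site))
```

Sites that are not in the admin-selected list never produce a Graph request, so their documents are never indexed.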

Network

The following network connections need to be established and maintained. Wherever TLS 1.2+ is mentioned, it means TLS 1.2 and above only.
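On the client side, the TLS 1.2+ rule translates into a minimum protocol version. The Python standard library sketch below is an illustration only, not Elqano's configuration: it builds a client context that verifies certificates and refuses anything older than TLS 1.2.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Client-side TLS context that refuses anything older than TLS 1.2."""
    # create_default_context() enables certificate and host name verification.
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

Such a context can be passed, for instance, to `urllib.request.urlopen(url, context=...)`; a server offering only TLS 1.0 or 1.1 then fails the handshake.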

Client access to the web interface / Teams HTTP callback

Users connect to the Elqano web interface.

- Data: Access all resources exposed by the web application

- Protocol: HTTPS

- Ports: 443

- Authentication:

  - SSO via Azure AD using SAMLv2 protocol for user requests

  - Teams signature validation for chat requests

- Encryption: TLS 1.2+

Elqano to Teams Bot API

The application server pushes messages to Teams and retrieves Teams data.

- Data: Messages to users

- Protocol: HTTPS

- Ports: 443

- Authentication: OAuth2 tokens

- Encryption: TLS 1.2+

Elqano to Microsoft Graph API

The application server connects to the Microsoft Graph API to retrieve the customer’s data.

- Data: Read-only access to documents and user profiles

- Protocol: HTTPS

- Ports: 443

- Authentication: OAuth2 tokens

- Encryption: TLS 1.2+

Load balancer to Elqanoqa

Web application server

- Data: Access all resources exposed by the web application

- Protocol: HTTP over internal network

- Ports: 80

- Authentication: None

- Encryption: None

Internal docker network communications

App Service containers communicate with each other.

- Data: All application internal data

- Protocol: HTTP over internal network

- Ports: 80

- Authentication: None

- Encryption: None

Note: This container-to-container communication is handled internally by Azure App Service itself, which uses a custom Azure implementation of docker-compose. See the Microsoft documentation for more details: https://docs.microsoft.com/en-us/azure/app-service/configure-custom-container?pivots=container-linux#configure-multi-container-apps

As this traffic stays on an internal network, it is completely isolated from the outside world.

Elqano to Postgres database

Application servers connect to the Postgres database.

- Data: All application data stored in database

- Protocol: Postgres protocol (TCP message protocol)

- Ports: 5432

- Authentication: Postgres username/password authentication

- Encryption: Postgres SSL encryption

Elqano to Elasticsearch

Application servers connect to the Elasticsearch index to query documents or questions and answers.

- Data: Document textual content, questions and answers, and search queries

- Protocol: HTTPS

- Ports: 443

- Authentication: Elasticsearch private key

- Encryption: TLS 1.2+

Elqano to Azure storage

The application server connects to Azure Storage to store and serve documents.

- Data: Documents waiting for indexing, documents shared between users, admin reports

- Protocol: HTTPS

- Ports: 443

- Authentication: Access Key

- Encryption: TLS 1.2+

Elqano to Redis Server

Application servers exchange messages through the Redis database.

- Data: Job class to process and resource ID (no sensitive data is exchanged via Redis)

- Protocol: RESP (REdis Serialization Protocol, over TCP)

- Ports: 6380

- Authentication: Access Key

- Encryption: TLS 1.2+
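Port 6380 is the TLS port of Azure Cache for Redis, and the access key acts as the Redis password. The sketch below builds a `rediss://` connection URL, the scheme that clients such as redis-py interpret as "connect over TLS". The host name and key are placeholders, not real values.

```python
from urllib.parse import quote


def redis_tls_url(host: str, access_key: str, port: int = 6380, db: int = 0) -> str:
    """Build a rediss:// URL (the extra 's' means TLS) for an access-key-protected cache."""
    # Access keys may contain '+', '/' or '=', which must be percent-encoded in a URL.
    password = quote(access_key, safe="")
    return f"rediss://:{password}@{host}:{port}/{db}"
```

Keeping the key out of source code and reading it from the App Service configuration fits the approach described for the other components.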

Encryption

Several controls ensure data security both in transit and at rest.

Encryption in transit

Encrypted communications are used whenever systems communicate over the network. The following connections use HTTPS with TLS 1.2+ encryption:

- User access to the web application

- Teams bot callback sent to the application server

- Application server connections to Microsoft Graph API

- Application server connections to Teams Bot API

- Application server connections to ElasticSearch

- Application server connections to Azure Storage

The following connections use the Postgres protocol and RESP, respectively, with SSL/TLS encryption:

- Application server connections to Postgres

- Application server connections to Redis

Encryption at rest

The Elqano application does not globally encrypt the data in its datastores. Encryption at rest depends on each underlying service:

Postgres

The Azure Database for PostgreSQL service uses the FIPS 140-2 validated cryptographic module for storage encryption of data at-rest. Data, including backups, are encrypted on disk, including the temporary files created while running queries. The service uses the AES 256-bit cipher included in Azure storage encryption, and the keys are system managed. Storage encryption is always on and can’t be disabled. For more details see https://docs.microsoft.com/en-us/azure/postgresql/single-server/concepts-security.

Redis

The Redis database is not used as persistent storage and does not hold any sensitive values. Only scheduled tasks and resource identifiers are stored temporarily, for exchange between the web and worker instances.

Redis data cannot be encrypted at rest, as it is held in memory only.

Reference: https://techcommunity.microsoft.com/t5/azure-paas-blog/encryption-on-azure-cache-for-redis/ba-p/1800449#:~:text=Encryption%20at%20Rest,way%2C%20having%20the%20same%20exposure.

Elasticsearch

Elastic Cloud data is encrypted at rest by default at the infrastructure level. The Elasticsearch index contains the text content of the documents as well as the questions and answers. This data cannot be encrypted at the application level, as the application needs to be able to run search queries on it.

Azure storage

All files stored in Azure Storage are encrypted at rest, using Azure-managed encryption.

Authentication management

Teams

Access to, and authentication for, the chat in Teams are controlled by the MS Teams application. The chat application only validates user permissions through Azure Active Directory.

The MS Teams app has an additional “Wall” feature that replicates the wall of the web app. To display the user’s personalized wall content in the MS Teams bot, the SSO application is configured to accept credentials from the MS Teams app.

Web portal

Single sign-on (SSO) access to the web application is handled by Azure Active Directory, using SAMLv2 for authentication and authorization.

The permissions to connect to Elqano web portal will be managed in Azure AD by assigning users or groups to the configured Enterprise application.

Some users will have a specific “super user” permission to access and moderate the questions and answers exchanged via the application. These permissions are assigned in the Elqano admin section itself.

The admins will be identified by the project team.

Access Keys

Graph API

The access keys are encrypted and stored directly in the Postgres Database. If needed, it is possible to update them using the admin interface.

Elqano requires access to the Microsoft Graph API for several purposes:

- Access to SharePoint Online documents for indexing

- Read user profiles

- Onboard users

These purposes require the following permissions:

- Sites.Read.All permission – read access to sites & documents

- User.Read.All permission – read access to users data

- openid permission – enables Teams wall authentication

- User.Read permission – enables reading user information

- TeamsAppInstallation.ReadWriteForUser.All permission – onboard users

Teams Bot API

The application requires access to the Teams Bot API, which is used to send messages to users in Teams.

This API is the only one requiring write permissions, but the scope is limited to users authorized to use the Bot application in Teams.

The application connects to the API using an OAuth2 application flow. The access keys are encrypted and stored directly in the Postgres Database.
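An OAuth2 application flow of this kind is the client credentials grant: the application exchanges its ID and secret for a token at the Microsoft identity platform. The sketch below only builds the token request with the standard library and does not send it. The tenant and scope are parameters; for the Bot Framework, the tenant `botframework.com` and the scope `https://api.botframework.com/.default` are commonly used, but check this against the Bot Framework documentation for the bot's configuration.

```python
from urllib.parse import urlencode
from urllib.request import Request


def client_credentials_request(tenant: str, client_id: str, client_secret: str,
                               scope: str) -> Request:
    """Build (but do not send) an OAuth2 client credentials token request."""
    url = f"https://login.microsoftonline.com/{tenant}/oauth2/v2.0/token"
    body = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        # The secret is stored encrypted in Postgres; decrypt it before calling this.
        "client_secret": client_secret,
        "scope": scope,
    }).encode("ascii")
    return Request(url, data=body, method="POST",
                   headers={"Content-Type": "application/x-www-form-urlencoded"})
```

Sending it with `urllib.request.urlopen(...)` returns a JSON document whose `access_token` is then used as a bearer token when calling the API.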

Other API keys

No other API keys are used. The other services that use access keys are:

- Elasticsearch

- Azure Storage

As a reminder, PostgreSQL uses username/password authentication.

All the other keys or credentials are stored in the Azure App Service configuration as environment variables.

Offboarded users management

The application checks daily whether users are still registered in the client’s Azure Active Directory. If they are not, these offboarded users are automatically blacklisted and excluded from the list of potential authors. Thus, if a user leaves the company, he or she will be excluded from the application within 24 hours of the deletion of his/her Azure account.
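The daily check boils down to a set difference between the application's users and the users still present in the directory. The sketch below assumes both are available as sets of user IDs (for example Azure AD object IDs). How the directory list is fetched is not shown, and the function names are hypothetical.

```python
def users_to_blacklist(app_user_ids: set[str], directory_user_ids: set[str],
                       blacklisted: set[str]) -> set[str]:
    """Return app users no longer present in Azure AD that are not yet blacklisted."""
    return (app_user_ids - directory_user_ids) - blacklisted


def eligible_authors(app_user_ids: set[str], blacklisted: set[str]) -> set[str]:
    """Blacklisted users are excluded from the list of potential authors."""
    return app_user_ids - blacklisted


# Hypothetical daily run: "bob" has been deleted from Azure AD.
app_users = {"alice", "bob", "carol"}
blacklist: set[str] = set()
blacklist |= users_to_blacklist(app_users, {"alice", "carol"}, blacklist)
print(sorted(eligible_authors(app_users, blacklist)))
```

Because the check is idempotent, running it again the next day adds nobody new unless another account has been deleted.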

Data replication

Data replication and recovery is handled by Azure and Elastic PaaS services.

All data remains in the client’s Azure tenant, except for the Elastic index. Even so, the Elastic data is readable only by the Elqano app and remains manageable only by the client.

Azure Database for PostgreSQL

The default backup retention period is 7 days; this value can be configured in the service. Replication is also possible and fully handled by the service.

Elasticsearch

The Elastic service creates snapshots automatically and can restore them at any time. The data is automatically distributed across 2 indexes in the cluster.

The cluster data can be rebuilt from the PostgreSQL data at any time.
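As an illustration of such a rebuild, rows read from PostgreSQL can be turned into the newline-delimited JSON body expected by the Elasticsearch `_bulk` API (an action line followed by the document, each on its own line). The index name and the row shape are hypothetical, and sending the body (POST to `/_bulk` with `Content-Type: application/x-ndjson`) is not shown.

```python
import json


def bulk_index_body(index: str, rows) -> str:
    """Turn (id, document) pairs read from PostgreSQL into an Elasticsearch _bulk body."""
    lines = []
    for doc_id, source in rows:
        # Action line: index under a stable id, so a rerun overwrites instead of duplicating.
        lines.append(json.dumps({"index": {"_index": index, "_id": str(doc_id)}}))
        # Source line: the document itself.
        lines.append(json.dumps(source))
    # The _bulk API expects newline-delimited JSON that ends with a newline.
    return ("\n".join(lines) + "\n") if lines else ""


print(bulk_index_body("qa", [(42, {"question": "Who approves expenses?", "answer": "Finance."})]))
```

Using the PostgreSQL primary key as the Elasticsearch `_id` makes the rebuild repeatable.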